Theoretical Aspects of Lexical Analysis


Lexical analysis, the first step in the compilation process, splits the input data into segments and classifies them. Each segment of the input (a lexeme) will be assigned a label (the token).

Here, regular expressions are used to recognize portions of the input text.

Regular Expressions

Regular expressions are defined over a finite alphabet Σ = { a, b, ..., c } together with the empty string ε.

The languages (sets of strings) for each of these entities are:

- {ε}, for ε

- {a}, for an entry a in Σ

The following primitive constructors are defined:

- concatenation

- alternative

- Kleene-star (*)
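The three constructors can be illustrated by treating a language as a plain Python set of strings. This is only a minimal sketch: the function names `concat`, `alt` and `star` are chosen here for illustration, and because the Kleene star produces an infinite language, `star` is truncated to strings of at most `max_len` characters (an assumption made so the result stays finite).

```python
def concat(l1, l2):
    """Concatenation: each string of l1 followed by each string of l2."""
    return {x + y for x in l1 for y in l2}


def alt(l1, l2):
    """Alternative: strings that belong to either language."""
    return l1 | l2


def star(lang, max_len):
    """Kleene star, truncated to strings of at most max_len characters."""
    result = {""}      # zero repetitions yield the empty string ε
    frontier = {""}
    while frontier:
        # Append one more string of lang to everything found in the last round.
        frontier = {x + y for x in frontier for y in lang
                    if len(x + y) <= max_len} - result
        result |= frontier
    return result


A, B = {"a"}, {"b"}
print(sorted(concat(A, B)))   # ['ab']
print(sorted(alt(A, B)))      # ['a', 'b']
print(sorted(star(alt(A, B), 2), key=lambda s: (len(s), s)))
# ['', 'a', 'b', 'aa', 'ab', 'ba', 'bb']
```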

Extensions (derived from the above):

- Transitive closure (+) - a+ ("one or more 'a'")

- Optionality (?) - a? ("zero or one 'a'")

- Character classes - [a-z] ("all chars in the 'a-z' range" - only one character is matched)
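That these extensions add no expressive power can be checked empirically. The sketch below uses Python's standard `re` module (not the notation of this text) to compare each extended form with an equivalent written only with concatenation, alternative and Kleene star, over all strings of up to three characters from a small test alphabet. The helper names `samples` and `agree` and the alphabet "abcd" are assumptions made for this example.

```python
import re
from itertools import product

# (extended form, equivalent form using only the primitive constructors)
EQUIVALENT = [
    (r"a+", r"aa*"),          # one or more 'a'
    (r"a?", r"(a|)"),         # 'a' or the empty string
    (r"[a-c]", r"(a|b|c)"),   # exactly one character from the range
]


def samples(alphabet="abcd", max_len=3):
    """Yield every string over the alphabet with at most max_len characters."""
    for n in range(max_len + 1):
        for chars in product(alphabet, repeat=n):
            yield "".join(chars)


def agree(extended, primitive):
    """True if both patterns accept exactly the same sample strings."""
    return all(
        bool(re.fullmatch(extended, s)) == bool(re.fullmatch(primitive, s))
        for s in samples()
    )


for extended, primitive in EQUIVALENT:
    print(extended, "is equivalent to", primitive, ":", agree(extended, primitive))
```

A finite sample is not a proof, but it shows the intended reading of each extension.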

Recognizing/Matching Regular Expressions: Thompson's Algorithm

Since we are going to use sets of regular expressions for recognizing input strings, we need a way of implementing that functionality. The recognition process can be efficiently carried out by finite state automata that either accept or reject a given string.

Ken Thompson, the creator of the B language (one of the predecessors of C) and one of the creators of the UNIX operating system, devised the algorithm that carries his name and describes how to build an acceptor for a given regular expression.

Created for Thompson's implementation of the grep UNIX command, the algorithm builds an NFA from a regular expression specification. This NFA can then be converted into a DFA which, after minimization, is an acceptor for the original expression.

The following sections cover the algorithm's construction primitives and how to recognize a simple expression. Lexical analysis as performed by tools such as flex must watch for several expressions at once, each one corresponding to a token. Such automata feature multiple final states, one or more for each recognized expression.

Building the NFA: Thompson's Algorithm

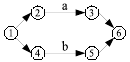

Thompson's algorithm is based on a few primitives, as shown in the following table:

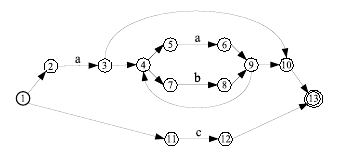
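The construction can also be sketched in code. The following is a minimal, non-authoritative Python sketch of one common formulation of the primitives, not necessarily the exact shapes drawn in the table: each fragment has a single start state and a single accepting state, and concatenation links two fragments with an ε-transition instead of merging states. The names `Frag`, `symbol`, `concat`, `alt`, `star`, `closure` and `accepts` are all assumptions made for this example. The resulting NFA is simulated directly, without converting it to a DFA.

```python
from itertools import count

EPS = None          # label used for ε-transitions
_ids = count()      # generator of fresh state numbers


class Frag:
    """NFA fragment: one start state, one accepting state, a list of edges."""
    def __init__(self, start, accept, edges):
        self.start, self.accept, self.edges = start, accept, edges


def symbol(c):
    """Fragment that accepts the single character c."""
    s, f = next(_ids), next(_ids)
    return Frag(s, f, [(s, c, f)])


def concat(x, y):
    """x followed by y, joined by an ε-transition."""
    return Frag(x.start, y.accept, x.edges + y.edges + [(x.accept, EPS, y.start)])


def alt(x, y):
    """Either x or y: new start and accepting states wrap both fragments."""
    s, f = next(_ids), next(_ids)
    extra = [(s, EPS, x.start), (s, EPS, y.start),
             (x.accept, EPS, f), (y.accept, EPS, f)]
    return Frag(s, f, x.edges + y.edges + extra)


def star(x):
    """Zero or more repetitions of x."""
    s, f = next(_ids), next(_ids)
    extra = [(s, EPS, x.start), (s, EPS, f),
             (x.accept, EPS, x.start), (x.accept, EPS, f)]
    return Frag(s, f, x.edges + extra)


def closure(nfa, states):
    """All states reachable from `states` using only ε-transitions."""
    stack, seen = list(states), set(states)
    while stack:
        q = stack.pop()
        for src, label, dst in nfa.edges:
            if src == q and label is EPS and dst not in seen:
                seen.add(dst)
                stack.append(dst)
    return seen


def accepts(nfa, text):
    """Simulate the NFA on text by tracking the set of active states."""
    current = closure(nfa, {nfa.start})
    for ch in text:
        moved = {dst for src, label, dst in nfa.edges
                 if src in current and label == ch}
        current = closure(nfa, moved)
    return nfa.accept in current


# NFA for the expression a(a|b)*|c
nfa = alt(concat(symbol("a"), star(alt(symbol("a"), symbol("b")))), symbol("c"))
for text in ["a", "abab", "c", "", "b", "ca"]:
    print(repr(text), accepts(nfa, text))
```

The first three strings are accepted and the last three are rejected. Keeping exactly one start and one accepting state per fragment is what lets the primitives compose without inspecting the inside of their operands.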

Complex expressions are built from these primitives. The following diagram corresponds to the expression a(a|b)*|c (note how the Kleene-star operator affects an alternative group):