Theoretical Aspects of Lexical Analysis

From Wiki**3

Lexical analysis, the first step in the compilation process, splits the input data into segments and classifies them. Each segment of the input (a lexeme) will be assigned a label (the token).

In this case, we will be using regular expressions for recognizing portions of the input text.

Regular Expressions

Regular expressions are defined considering a finite alphabet Σ = { a, b, c, ... } and the empty string ε:

The languages (sets of strings) for each of these entities are:

- {ε}, for Σ

- {a}, for an entry a in Σ

The following primitive constructors are (informally) defined:

- concatenation: ab ("a followed by b")

- alternative: a|b ("a or b")

- Kleene-star (*): a* ("zero or more a")

Extensions (derived from the above):

- Transitive closure (+) - a+ ("one or more a")

- Optionality (?) - a? ("zero or one a")

- Character classes - [a-z] ("all chars in the a-z range" - only one character is matched)

Other:

- Begining: ^ (^abc means "abc at the beginning of the string")

- End: $ (abc$ means "abc at the end of the string")

- Character class negation: [^ ..... ] (e.g., [^a-z] means "any character except a lower-case letter between a and z")

Recognizing/Matching Regular Expressions: Thompson's Algorithm

Since we are going to use sets of regular expressions for recognizing input strings, we need a way of implementing that functionality. The recognition process can be efficiently carried out by finite state automata that either accept of reject a given string.

Ken Thompson, the creator of the B language (one of the predecessors of C) and one of the creators of the UNIX operating system, devised the algorithm that carries his name and describes how to build an acceptor for a given regular expression.

Created for Thompson's implementation of the grep UNIX command, the algorithm creates an NFA from a regular expression specification that can then be converted into a DFA. It is this DFA that after minimization yields an automaton that is an acceptor for the original expression.

The following sections cover the algorithm's construction primitives and how to recognize a simple expression. Lexical analysis such as performed by flex requires several expressions to be watched for, each one corresponding to a token. Such automatons feature multiple final states, one or more for each recognized expression.

Building the NFA: Thompson's Algorithm

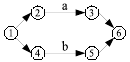

Thompson's algorithm is based on a few primitives, as show in the following table:

Complex expressions are built from these primitives. The following diagram corresponds to the expression a(a|b)*|c (note how the Kleene-star operator affects an alternative group):

Building DFAs from NFAs

NFAs are not well suited for computers to work with, since each state may have multiple acceptable conditions for transitioning to another state (searching and backtracking would be needed for directly using an NFA). Thus, it is necessary to transform the automaton so that each state has a single transition for each possible condition. This process is called determination. The algorithm for transforming an NFA into a DFA is a simple one and relies on two primitive functions, move and ε-closure.

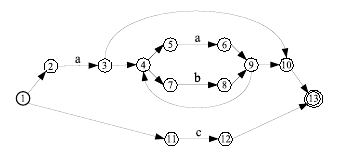

The move function is defined over a set of NFA states and input symbol pairs and a set of NFA states sets: for each state and input symbol, it computes the set of reachable states. As an example consider, for the NFA in the previous automaton:

- move({2}, a) = {3}

- move({5}, a) = {6}

- move({11}, a) = {}

The ε-closure function is defined for sets of states: the function computes a new set of states reachable from the initial set by using only all the possible ε transitions to other states (including each state itself), as well as the states reachable through transitions from those states. Thus, considering the previous NFA, we could write:

- ε-closure({1}) = {1, 2, 11}

- ε-closure(move({2}, a)) = ε-closure({3}) = {3, 4, 5, 7, 10, 13}

With the two above functions we can describe a determination algorithm. The input for the determination algorithm is a set of NFA states and their corresponding transitions; a distinguished start state and a set of final states. The output is a set of DFA states (as well as the configuration of NFA states corresponding to each DFA state); a distinguished start state and a set of final states.

The algorithm considers an agenda containing pairs of DFA states and input symbols. Each pair corresponds to a possible transition in the DFA (possible in the sense that it may not exist). Each new state, obtained from considering successful transitions from agenda pairs, must be considered as well with each input symbol. The algorithm ends when no more pairs exist in the agenda and no more can be added.

DFA states containing in their configurations final NFA states are also final.

Step 1: Compute the ε-closure of the NFA's start state. The resulting set will be the DFA's start state, I0. Add all pairs (I0, α) (∀α∈Σ, with Σ the input alphabet) to the agenda.

Step 2: For each unprocessed pair in the agenda (In, α), remove it from the agenda and compute ε-closure(move(In, α)): if the resulting configuration, In+1, is not a known one (i.e., it is different from all Ik, ∀k<n+1), add the corresponding pairs to the agenda.

Step 3: Repeat step 2 until the agenda is empty.

The algorithm's steps can be tabled (see below): Σ = {a, b, c} is the input alphabet; α ∈ Σ is an input symbol; and In+1 = ε-closure(move(In, α)). Numbers in bold face correspond to final states

| In | α∈Σ | move(In, α) | ε-closure(move(In, α)) | In+1 = ε-closure(move(In, α)) |

|---|---|---|---|---|

| - | - | 1 | 1, 2, 11 | 1 |

| 1 | a | 3 | 3, 4, 5, 7, 10, 13 | 2 |

| 1 | b | - | - | - |

| 1 | c | 12 | 12, 13 | 3 |

| 2 | a | 6 | 4, 5, 6, 7, 9, 10, 13 | 4 |

| 2 | b | 8 | 4, 5, 7, 8, 9, 10, 13 | 5 |

| 2 | c | - | - | - |

| 3 | a | - | - | - |

| 3 | b | - | - | - |

| 3 | c | - | - | - |

| 4 | a | 6 | 4, 5, 6, 7, 9, 10, 13 | 4 |

| 4 | b | 8 | 4, 5, 7, 8, 9, 10, 13 | 5 |

| 4 | c | - | - | - |

| 5 | a | 6 | 4, 5, 6, 7, 9, 10, 13 | 4 |

| 5 | b | 8 | 4, 5, 7, 8, 9, 10, 13 | 5 |

| 5 | c | - | - | - |

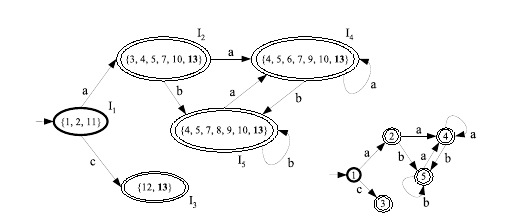

The graph representation of the DFA computed in accordance with the determination algorithm is presented below (the right part of the figure presents a simplified view). The numbers correspond to DFA states whose NFA state configurations are presented above.

DFA Minimization

The compaction process is simply a way of eliminating DFA states that are unnecessary. This may happen because one or more states are indistinguishable from each other, given the input symbols.

A simple algorithm consists of starting with a set containing all states and progressively dividing it according to various criteria: final states and non-final states are fundamentally different, so the corresponding sets must be disjoint; states in a set that have transitions to different sets, when considering the same input symbol are also different; states that have transitions on a given input symbol are also different from states that do not have those transitions. The algorithm must be applied until no further tests can be carried out.

Regarding the above example, we would have the following sets:

- All states: A = {1, 2, 3, 4, 5}; separating final and non-final states we get

- Final states, F = {2, 3, 4, 5}; and non-final states, NF = {1};

- Considering a and F: 2, 4, and 5 present similar behavior (all have transitions ending in states in the same set, i.e., 4); 3 presents a different behavior (i.e., no a transition). Thus, we get two new sets: {2, 4, 5} and {3};

- Each new partition must now be reassessed;

- Considering both a and b and {2, 4, 5}, we reach the conclusion that all states are indistinguishable from each other;

- Since {2, 4, 5} has no c transitions, it remains as is. Since all other sets are singular, the minimization process stops.

The following figure presents the process of minimizing the DFA (the starting point is the simplified view in the previous figure), in the form of a minimization tree (right part of the figure -- note that the lower edge label should read "a,b,c"):

Input Processing

After producing the minimized DFA, we are ready to process input strings and decide whether or not they accepted by the regular expression. The analysis process uses a table for keeping track of the analyzer's current state as well as of the transitions when analyzing the input. The analysis process ends when there is no input left to process and the current state is a final state. If the input is empty and the state is not final, then there was an error and the string is said to be rejected. If there is no possible transition for a given state and the current input symbol, then processing fails and the string is also rejected.

Recognizing Multiple Expressions

A lexical analyzer is an automaton that, in addition to accepting or rejecting input strings (as seen above), also assigns an identifier to the expression that matched the input. This identifier is known as token.

Building lexical analyzers is a simple matter of composing multiple analyzers for the component regular expressions. However, final states corresponding to different expressions must be kept separate. Other than this restriction, the process of building the DFA is the same as before: first the NFA is built according to Thompson's algorithm and the corresponding DFA minimized. The minimization process accounts for another slight difference: after separating states according to whether they are final or non-final, final states must be divided into sets according to the expressions they recognize.

The construction process

The following example illustrates the construction process for a lexical analyzer that identifies three expressions: G = {a*|b, a|b*, a*}. Thus, the recognized tokens are TOK1 = a*|b, TOK2 = a|b*, and TOK3 = a*. Note that the construction process handles ambiguity by selecting the token that consumes the most input characters and, if two or more tokens match, by selecting the first. It may possible that the lexical analyzer never signals one of the expressions: in an actual situations, this may be undesirable, but may be unavoidable. For instance, when recognizing identifiers and keywords, care must be exercised so as not to select an identifier when a keyword is desired.

Thompson's Algorithm and the NFA

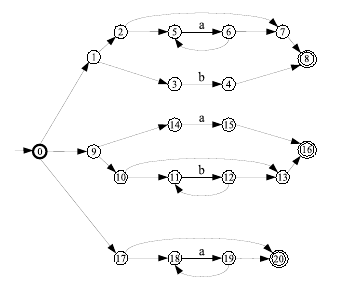

The following figure shows the NFA for the lexical analyzer for G = {a*|b, a|b*, a*}.

The following table shows the determination table for G = {a*|b, a|b*, a*}. As before, I0 = ε-closure({0}) and In+1 = ε-closure(move(In, α)), α ∈ Σ. Final states are marked in bold.

| In | α∈Σ | move(In, α) | ε-closure(move(In, α)) | In+1 = ε-closure(move(In, α)) | Token |

|---|---|---|---|---|---|

| - | - | 0 | 0, 1, 2, 3, 5, 7, 8, 9, 10, 11, 13, 14, 16, 17, 18, 20 | 0 | TOK1 |

| 0 | a | 6, 15, 19 | 5, 6, 7, 8, 15, 16, 18, 19, 20 | 1 | TOK1 |

| 0 | b | 4, 12 | 4, 8, 11, 12, 13, 16 | 2 | TOK1 |

| 1 | a | 6, 19 | 5, 6, 7, 8, 18, 19, 20 | 3 | TOK1 |

| 1 | b | - | - | - | - |

| 2 | a | - | - | - | - |

| 2 | b | 12 | 11, 12, 13, 16 | 4 | TOK2 |

| 3 | a | 6, 19 | 5, 6, 7, 8, 18, 19, 20 | 3 | TOK1 |

| 3 | b | - | - | - | - |

| 4 | a | - | - | - | - |

| 4 | b | 12 | 11, 12, 13, 16 | 4 | TOK2 |

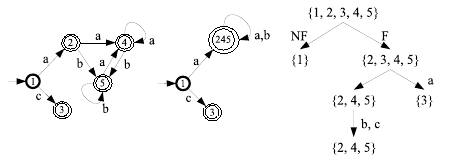

As this table clearly illustrates, all DFA states are final: each of them contains, at least, one final NFA state. When several final NFA states are present, the first is the one considered. In this way, we are able to select the first expression in the list, when multiple matches would be possible. Note also that the third expression is never matched. This expression corresponds to state 20 in the NFA: in the DFA this state never occurs by itself, meaning that the first expression is always preferred (as expected).

The DFA and the Minimized DFA

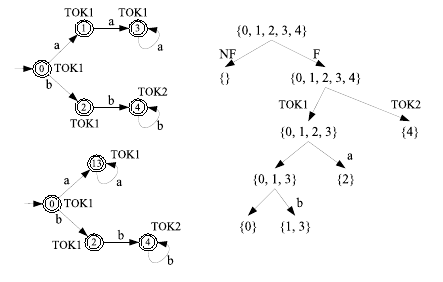

The minimization process is as before, but now we have to take into account that states may differ only with respect to the expression they recognize. Thus, after splitting states sets into final and non-final, the set of final states should be split according to the recognized expression. From this point on, the procedure is as before.

The following figure shows the DFA for a lexical analyzer for G = {a*|b, a|b*, a*}: the original (top left), minimized (bottom left), and minimization tree (right). Note that states 2 and 4 cannot be merged since they recognize different tokens.

After the last analysis, set {1,3} should be analysed again against the symbols in the alphabet. This is left as an exercise (result: the set is not divisible).

The Analysis Process and Backtracking

The following figure shows the process of analyzing the input string aababb. As can be seen from the table, several tokens are recognized and, for each one, the analyzer returns to the initial state to process the remainder of the input. Symbol $ marks the end of the input: when it is reached, the automaton must be in a final state for the input to be accepted.

| In | Input | In+1 / Token |

|---|---|---|

| 0 | aababb$ | 13 |

| 13 | ababb$ | 13 |

| 13 | babb$ | TOK1 |

| 0 | babb$ | 2 |

| 2 | abb$ | TOK1 |

| 0 | abb$ | 13 |

| 13 | bb$ | TOK1 |

| 0 | bb$ | 2 |

| 2 | b$ | 4 |

| 4 | $ | TOK2 |

The input string aababb is, after 10 steps, split into four tokens: TOK1 (corresponding to lexeme aa), TOK1 (b), TOK1 (a), and TOK2 (bb).

Backtracking occurs when the automaton cannot proceed from a non-terminal state: in this case, any symbols already considered (since the last seen final state), must be returned to the input, and the token associated with the last seen final state must be signaled before restarting processing of the remaining input string (see Exercise 6 below).

Exercises

Acceptors

For each regular expression, compute the non-deterministic finite automaton (NFA) by using Thompson's algorithm. In each case, compute the minimal deterministic finite automaton (DFA).

The alphabet is Σ = { a, b } in all cases.

- Exercise 1: (a|b)*

- Exercise 2: (a*|b*)*

- Exercise 3: ((ε|a)b)*

- Exercise 4: (a|b)*abb(a|b)*

Multi-token recognition: lexical analyzers

For each ordered sequence, compute the non-deterministic finite automaton (NFA) by using Thompson's algorithm. In each case, compute the minimal deterministic finite automaton (DFA).

The alphabet is Σ = { a, b } in all cases. Indicate the number of processing steps for each input string.

- Exercise 5: G = { ab, ab*, a|b }, input string = abaabb

- Exercise 6: G = { aa, aaaa, a|b }, input string = aaabaaaaa

Exercises (set 2)

- Exercise 7: G = { a*|b, a*, b*|a }, input string = aababb

- Exercise 8: G = { a*, a*|b, a|b* }, input string = aababb

- Exercise 9: G = { a*, ba*, a|b* }, input string = aababb

- Exercise 10: G = { a*|b, ba*, b* }, input string = aababb

- Exercise 11: G = { a*b, a*, b*a }, input string = aababb

- Exercise 12: G = { b*|a, a*, b|a* }, input string = aababb

- Exercise 13: G = { a*|b, a|b*, a* }, input string = aababb

- Exercise 14: G = { a*|b, a|b*, b* }, input string = aababb

- Exercise 15: G = { a*|c, a|b*, bc* }, input string = aaabcacc

- Exercise 16: G = { bc*, a*|c, a|b* }, input string = aaabcacc

- Exercise 17: G = { a*, ba*, a*|b }, input string = aababb

- Exercise 18: G = { ab*, (a|c)*, bc* }, input string = abbcac

- Exercise 19: G = { a*|b, b*|c, c*|a }, input string = abbcac

- Exercise 20: G = { a|b*, b|c*, a*|c }, input string = abbcac

- Exercise 21: G = { ab*, b|c*, a*|c }, input string = abbcac

- Exercise 22: G = { a|b*, bc*, a*|c }, input string = abbcac

- Exercise 23: G = { a|b*, b|c*, a*c }, input string = abbcac

- Exercise 24: G = { a|b*, bc*, a|c* }, input string = abbcac

- Exercise 25: G = { a*|b, b*c, (a|c)* }, input string = abbcac