Week 7 (3rd Period)

- António Morais

- Catarina Caramalho

- João Pinheiro

- Tiago Teixeira

- 3rd Period - Weekly Progress

- March 31, 2024

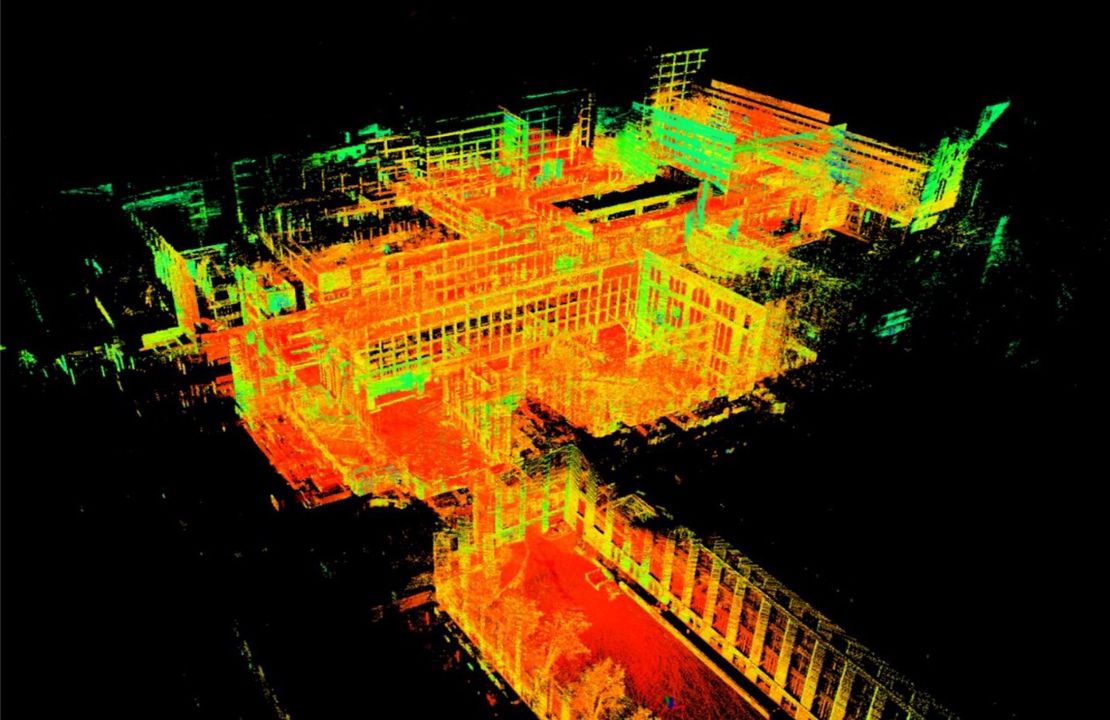

3D Mapping

→ LIO-SAM

During this week, the team delved into testing the LIO-SAM algorithm, but a problem arose from the need for synchronization of the Inertial Measurement Unit (IMU) and LiDAR. This process involves determining matrices and parameters to synchronize both components so that the algorithm works properly. The synchronization process is explained in detail on the Github repository of LIO-SAM , but it can also be understood by viewing the following images provided by the authors.

In simulation, the students encountered some setbacks as they initially believed they would need to make changes to perform the process illustrated above. Consequently, they decided to attempt implementing the algorithm on the TIAGo robot. However, another issue surfaced when it was discovered that the IMU initially considered for use is not compatible with the algorithm itself. The SLAM method under discussion requires a 9 degrees of freedom IMU, whereas the IMU available on the TIAGo only has 6 degrees of freedom.

Following this discovery, the team identified a solution and began working with the Jackal robot from the Institute For Robotics and Systems (ISR) to test the algorithm. This decision was made because the robot features a 9 degrees of freedom IMU and even a Velodyne LiDAR , which is the LiDAR used by the author of the algorithm during development.

Subsequently, the team attempted to synchronize the components but did not succeed, and did not make much further progress this week.

3D Localization

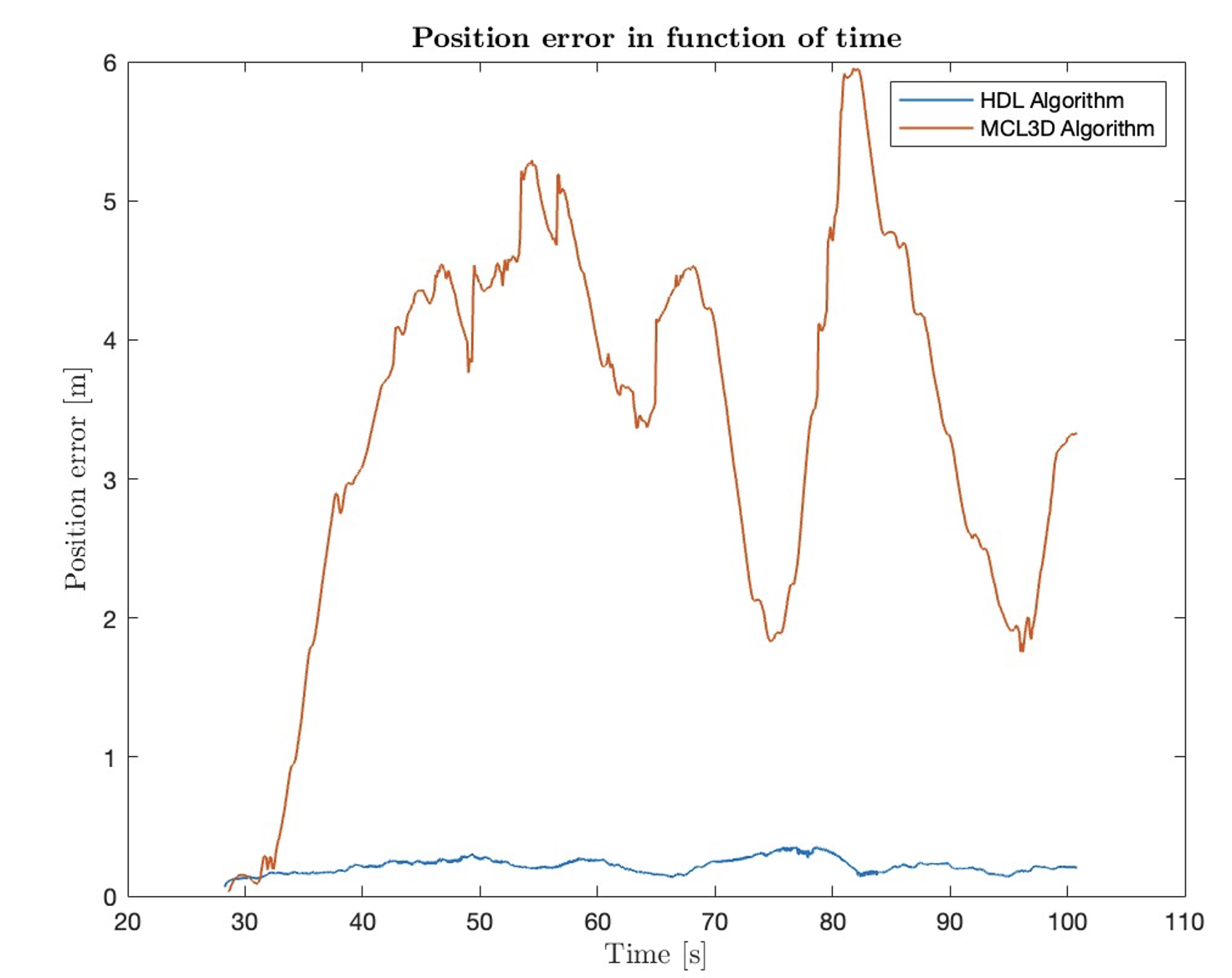

After last week advances, the team developed and ran a Python script, during the execution of both algorithms. The aim of this script is to calculate the localization and orientation errors of both algorithms in order to make a numerical comparison of them. The plots of the position errors as a function of time and the orientation errors as a function of time are the following:

Note that the time does not start at zero seconds, because the time that is counted is the ROS time, and the error script is one of the last to be launched.

As can be seen in the plots, the maximum position error for the HDL is 0.35m, which contrasts with the maximum of 6m achieved by the MCL3D. With regard to orientation errors, the highest peak was 0.11rad for the HDL and 3.1rad for the MCL3D. We can therefore conclude that HDL is indeed the best algorithm to use in simulation. However, this is not a sufficient condition for it to be the best algorithm to be implemented on the real robot. During a real test, the inaccuracy of the IMU is higher than that of the simulation, which can improve the performance of MCL3D. On the other hand, HDL doesn’t take into account the conditions of the real environment as much, so the theoretical results can be opposite to the real ones. This is why it is essential to test both algorithms in simulated and real environments.

Also it is important to refer that the result of the plot, was between -2π and 2π, and we had to normalize it to be between 0 and 2π, due to the physical constraints of the robot.

After getting the results of the plots, and analyze them carefully, the team decided to experiment both algorithms in the robot, but due to some technical problems, with the robot, we were not able to try them. We are looking forward to do so in the next couple of weeks.

2D Path Planning/Guidance

Last week, we began modifying the code of the poincloud_to_laserscan node , but we ran into some errors that prevented us from calculating the robot’s height based on the transform from the head frame to the base frame. Fortunately, we managed to resolve these issues and accurately calculate the height value. The next step was to determine how frequently we wanted to update this value, and we decided on a frequency of 1 Hz for now.

As shown in the gif below, our modifications to the node had the desired effect. As we extended the robot’s torso, the value for the maximum_height variable changed in real time.