Making Rustup Concurrent - The GSoC Wrap-Up

Spending a summer contributing to The Rust Project

Typical summers are filled with trips to the beach, at least along the Portuguese coast. This summer, however, was a little different: I decided to trade the sand and scorching sun for contributing to Rustup!

It all started with my master’s thesis. In September 2024, in conversations with my supervisor, Prof. Nuno Lopes, the topic of automatic translation from C++ to Safe Rust came up. Even though I hadn’t written much Rust code until then, I quickly became hooked on its elegance and inner workings. What stood out to me most was the ecosystem that surrounds Rust and how it creates such a pleasant experience for developers. For me, the main reason behind that experience was Rustup. Pairing my appreciation for Rust’s toolchain manager with the urge to learn how to write more idiomatic Rust and delve into a large codebase, I came up with the idea of applying to this GSoC project.

My first performance improvement

At the end of May, with my (very fruitful) community bonding period coming to an end, it was time to begin my journey toward making Rustup faster.

As planned with my mentor, @rami3l, to whom I am deeply grateful, the first step was to introduce concurrency in a minimally intrusive way.

As such, I started by picking up a two-year-old issue that required concurrency.

Although my PR took some time to develop and merge, it was a great experience, as I learned a lot during the review process (thanks to @djc for his clear and rapid responses).

This skyrocketed my motivation, as I could see my first performance improvement: rustup check was now running 3.3 times faster. (A detailed post about this adventure is available here.)
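The code behind this speedup is not shown here, so what follows is only a minimal sketch of the general idea, assuming each toolchain's update check is an independent, network-bound request: issue all checks at once instead of one after another. `check_one` and its "nightly has an update" rule are hypothetical placeholders, and standard-library scoped threads stand in for whatever concurrency mechanism Rustup actually uses.

```rust
use std::thread;
use std::time::Duration;

/// Hypothetical stand-in for asking the distribution server whether
/// `toolchain` has an update. The rule below is a placeholder, not Rustup's logic.
fn check_one(toolchain: &str) -> (String, bool) {
    thread::sleep(Duration::from_millis(50)); // simulated network latency
    (toolchain.to_string(), toolchain.starts_with("nightly"))
}

/// Runs every check concurrently and returns the results in input order.
fn check_all(toolchains: &[&str]) -> Vec<(String, bool)> {
    thread::scope(|s| {
        // Start all checks before waiting on any of them.
        let handles: Vec<_> = toolchains
            .iter()
            .map(|tc| s.spawn(move || check_one(tc)))
            .collect();
        // Joining in spawn order keeps the output in the same order as the input.
        handles.into_iter().map(|h| h.join().unwrap()).collect()
    })
}
```

Because the checks overlap, the total wall time is roughly that of the slowest request rather than the sum of all of them, which is where a multi-fold speedup for a command like rustup check can come from.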

From this PR I also learned an important lesson: the value of small, incremental PRs. Letting large changes accumulate only makes the review process more difficult, burdens the reviewers, and ultimately delays the merge. To avoid making that PR any longer and repeating my mistake, I submitted a follow-up PR, which can be seen here.

Is Rustup prepared to report status concurrently?

At that time, Rustup was not yet capable of outputting its status concurrently.

This posed a challenge, since my planned changes depended on it.

Learning from the previous experience, I decided to break the task into smaller steps, beginning with refactoring Rustup’s progress tracking to use indicatif, a concurrency-ready library.

In fact, this issue had already been raised by @rbtcollins, then the team lead of Rustup.

Undertaking this refactor was intimidating, as it was not merely a bug fix but a potentially breaking change that could have introduced errors (fortunately, it did not 😄). Afterwards, only two more PRs were needed for some housekeeping, namely #4447 and #4460. With this refactor complete, Rustup was ready for me to start adding concurrency to toolchain installations.
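To illustrate why concurrent status output needs dedicated support, here is a small sketch of the pattern that a library like indicatif takes care of: many workers produce progress events, but only one thread owns the terminal. This is not indicatif's API; the `Event` type and the `report_concurrently` function are hypothetical and use only the standard library.

```rust
use std::collections::BTreeMap;
use std::sync::mpsc;
use std::thread;

/// Hypothetical progress event sent by a worker to the reporter.
enum Event {
    Advance { component: String, bytes: u64 },
    Done { component: String },
}

/// Simulates one worker per component sending progress in `chunk`-sized steps.
/// Returns, per component, the bytes reported and whether it finished.
fn report_concurrently(sizes: &[(&str, u64)], chunk: u64) -> BTreeMap<String, (u64, bool)> {
    assert!(chunk > 0, "chunk must be positive");
    let (tx, rx) = mpsc::channel::<Event>();

    let workers: Vec<_> = sizes
        .iter()
        .map(|&(name, total)| {
            let tx = tx.clone();
            let name = name.to_string();
            thread::spawn(move || {
                let mut sent = 0;
                while sent < total {
                    let step = chunk.min(total - sent);
                    sent += step;
                    tx.send(Event::Advance { component: name.clone(), bytes: step }).unwrap();
                }
                tx.send(Event::Done { component: name }).unwrap();
            })
        })
        .collect();
    drop(tx); // the loop below ends once every worker has dropped its sender

    // Only this thread prints, so lines from different workers never interleave mid-write.
    let mut state: BTreeMap<String, (u64, bool)> = BTreeMap::new();
    for event in rx {
        match event {
            Event::Advance { component, bytes } => {
                let entry = state.entry(component.clone()).or_insert((0, false));
                entry.0 += bytes;
                println!("{component}: {} bytes", entry.0);
            }
            Event::Done { component } => {
                state.entry(component.clone()).or_insert((0, false)).1 = true;
                println!("{component}: done");
            }
        }
    }
    for worker in workers {
        worker.join().unwrap();
    }
    state
}
```

A real progress library additionally redraws bars in place instead of printing new lines, but the ownership split (producers send, one renderer draws) is the part that makes concurrent reporting safe.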

My internet is slow. Can I install a toolchain faster?

Yes, indeed you can. For slower internet connections (e.g. 20 MBps or less), waiting for one component to finish downloading before starting the next is a waste of time. To improve the experience for such users, I submitted PR #4436, which allows components to be downloaded concurrently rather than sequentially and can provide up to a 1.5x speedup. Moreover, as discussed in this thread, the number of concurrent downloads should be configurable by the user, because for high-speed connections concurrent downloads do not provide a meaningful performance improvement and can introduce unnecessary overhead.

To make this change effective for all users, a new environment variable was added to Rustup: RUSTUP_CONCURRENT_DOWNLOADS.

This variable allows users to set the number of components downloaded concurrently, so that machines with fast internet connections keep their performance while machines with slower connections benefit from the change.

The default value for this variable was set to 2 (see the relevant discussion here), which was sufficient to improve performance on slower connections without affecting the experience of other users.
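The following sketch shows one way a limit like RUSTUP_CONCURRENT_DOWNLOADS could bound the number of downloads in flight. It is an illustration under stated assumptions, not Rustup's implementation: the parsing rules (falling back to 2 when the variable is unset, non-numeric, or zero), the `download_all` worker pool, and the simulated 20 ms transfer are all hypothetical.

```rust
use std::collections::VecDeque;
use std::env;
use std::sync::atomic::{AtomicUsize, Ordering};
use std::sync::{mpsc, Arc, Mutex};
use std::thread;
use std::time::Duration;

// Fallback matching the default of 2 described above.
const DEFAULT_CONCURRENT_DOWNLOADS: usize = 2;

/// Hypothetical parser: a missing, non-numeric, or zero value falls back to the default.
fn parse_limit(raw: Option<&str>) -> usize {
    raw.and_then(|s| s.trim().parse::<usize>().ok())
        .filter(|&n| n > 0)
        .unwrap_or(DEFAULT_CONCURRENT_DOWNLOADS)
}

/// Reads RUSTUP_CONCURRENT_DOWNLOADS from the process environment.
fn limit_from_env() -> usize {
    parse_limit(env::var("RUSTUP_CONCURRENT_DOWNLOADS").ok().as_deref())
}

/// Downloads `components` with at most `limit` transfers in flight.
/// Returns the components in completion order and the peak number of simultaneous downloads.
fn download_all(components: Vec<String>, limit: usize) -> (Vec<String>, usize) {
    let queue = Arc::new(Mutex::new(VecDeque::from(components)));
    let in_flight = Arc::new(AtomicUsize::new(0));
    let peak = Arc::new(AtomicUsize::new(0));
    let (tx, rx) = mpsc::channel();

    // Exactly `limit` workers, so no more than `limit` downloads can run at once.
    let workers: Vec<_> = (0..limit)
        .map(|_| {
            let (queue, in_flight, peak, tx) =
                (Arc::clone(&queue), Arc::clone(&in_flight), Arc::clone(&peak), tx.clone());
            thread::spawn(move || loop {
                // The lock guard is dropped at the end of this statement.
                let next = queue.lock().unwrap().pop_front();
                let Some(name) = next else { break };
                let now = in_flight.fetch_add(1, Ordering::SeqCst) + 1;
                peak.fetch_max(now, Ordering::SeqCst);
                thread::sleep(Duration::from_millis(20)); // stand-in for the network transfer
                in_flight.fetch_sub(1, Ordering::SeqCst);
                tx.send(name).unwrap();
            })
        })
        .collect();

    drop(tx); // the channel closes once every worker has exited
    let finished: Vec<String> = rx.iter().collect();
    for worker in workers {
        worker.join().unwrap();
    }
    (finished, peak.load(Ordering::SeqCst))
}
```

A user on a slow connection would then raise the limit by setting, for example, `RUSTUP_CONCURRENT_DOWNLOADS=4` in the environment before running rustup, while leaving it unset keeps the default of 2. Spawning a fixed number of workers that pull from a shared queue is just one simple way to enforce such a bound.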

Although this change on its own may not benefit most Rust users, it laid the groundwork for improving performance for all users, regardless of their internet connection quality.

My internet is fast. Can I also have faster installations?

Yes, of course. In addition to concurrent downloads, which may only benefit a limited set of users, this GSoC project includes another feature: interleaved installations. Before diving into this, it is important to highlight that Rustup’s previous modus operandi involved downloading all components sequentially and only proceeding with installations after all downloads had completed – also sequentially. This created an unnecessary (and constant) delay for users, who had to wait for all downloads to finish before seeing any components start installing.

With this motivation in mind, I submitted a new PR aimed at providing a considerable speedup for the rustup toolchain install command – and consequently rustup update – by allowing installations to begin while components are still being downloaded.

In a nutshell, instead of waiting for every download to complete, a component can be installed as soon as its download finishes.

Note that installations remain sequential: while one component is being installed, other components that have already been downloaded must wait until that installation completes. Installing components concurrently was beyond the scope of this project, as it would not provide as significant an improvement as the changes described above. Nevertheless, it remains on my roadmap, to be investigated with the community to determine whether it is worthwhile.

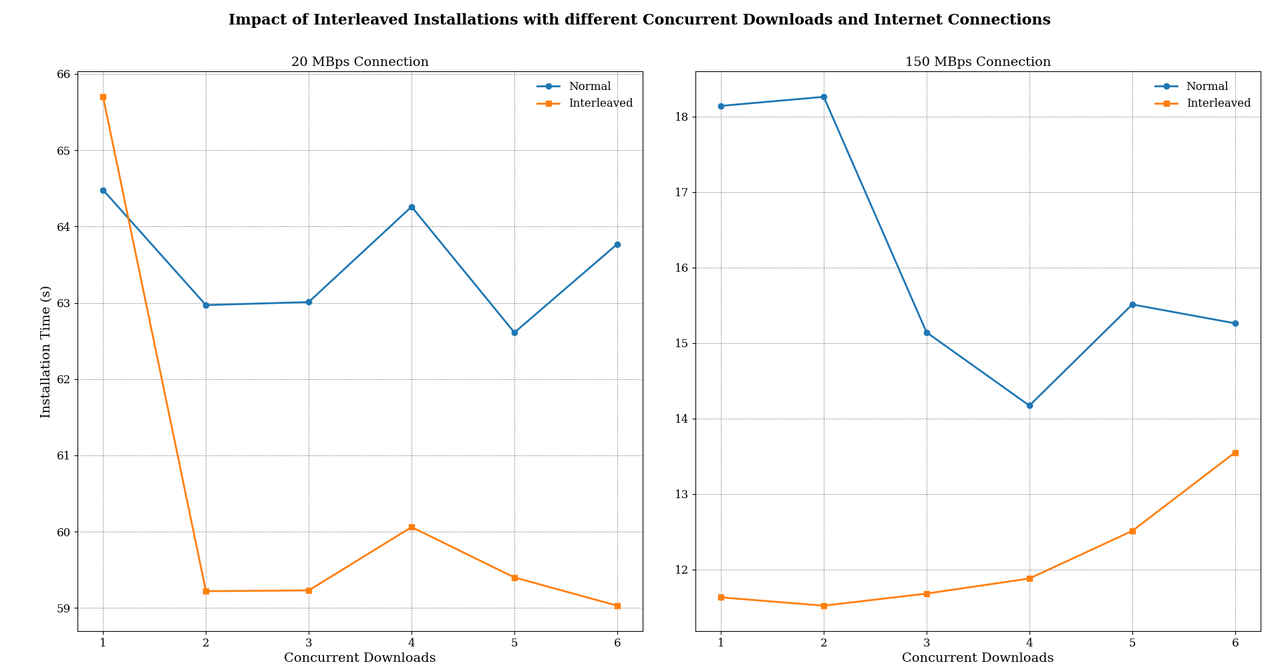
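A minimal sketch of interleaved installation, assuming downloads report completion over a channel: each download runs on its own thread, and a single installer loop installs each component the moment it arrives. Installs therefore stay sequential, as noted above, but no longer wait for the slowest download. `install` is a hypothetical stand-in for unpacking a component, the sleep durations simulate download times, and the concurrent-download limit is omitted for brevity.

```rust
use std::sync::mpsc;
use std::thread;
use std::time::Duration;

/// Hypothetical stand-in for unpacking a component into the toolchain directory.
fn install(name: &str, installed: &mut Vec<String>) {
    installed.push(name.to_string());
}

/// Downloads every component concurrently and installs each one as soon as it arrives.
/// `components` pairs a name with a simulated download time in milliseconds.
/// Returns the order in which components were installed.
fn download_and_install(components: &[(&str, u64)]) -> Vec<String> {
    let (tx, rx) = mpsc::channel::<String>();
    let downloads: Vec<_> = components
        .iter()
        .map(|&(name, ms)| {
            let tx = tx.clone();
            let name = name.to_string();
            thread::spawn(move || {
                thread::sleep(Duration::from_millis(ms)); // simulated download
                tx.send(name).expect("installer stopped listening");
            })
        })
        .collect();
    drop(tx); // the loop below ends once every download has reported

    // A single receiving loop: installs happen one at a time, in completion order.
    let mut installed = Vec::new();
    for name in rx {
        install(&name, &mut installed);
    }
    for handle in downloads {
        handle.join().unwrap();
    }
    installed
}
```

With simulated download times of 160 ms for rustc, 10 ms for cargo, and 80 ms for rust-std, cargo and rust-std are installed while rustc is still downloading, instead of everything waiting for the rustc transfer to finish.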

With interleaved installations, by the time all downloads are completed, most installations are already finished, vastly reducing the constant delay previously experienced after downloading components. To illustrate the impact of this change, I ran several benchmarks (specifications available here), which are presented below:

Before analyzing the plots, it is important to highlight that the y-axis does not start at zero and that the plots cannot be directly compared to one another. With that clarified, we can observe that interleaved installations do not introduce any overhead, except in the case of a slow internet connection combined with sequential downloads.

These results are very encouraging!

The previous decision to set RUSTUP_CONCURRENT_DOWNLOADS with a default value of 2 appears to have been a good choice, as this configuration yields the best performance.

Furthermore, for fast internet connections, even with just two concurrent downloads, we can reduce installation time by a little over six seconds, representing a 1.5x speedup.
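Taking the two reported figures at face value (a saving of a little over 6 s and a 1.5x speedup), they imply the approximate durations involved. This is back-of-the-envelope arithmetic on the stated numbers, not an additional measurement:

$$
\frac{T_{\text{before}}}{T_{\text{after}}} = 1.5, \qquad T_{\text{before}} - T_{\text{after}} \approx 6\,\text{s}
\;\Longrightarrow\; T_{\text{after}} \approx \frac{6\,\text{s}}{1.5 - 1} = 12\,\text{s}, \qquad T_{\text{before}} \approx 18\,\text{s}.
$$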

It is important to note that these benchmarks were conducted on a high-spec machine equipped with an Intel Xeon E5-2630 v2 processor and 64 GB of RAM. On lower-spec machines, the speedup could be even greater: extracting downloaded components may take longer there, so overlapping extraction with ongoing downloads through interleaved installations has more time to save.

What did I actually achieve?

In conclusion, this GSoC project resulted in three main improvements: (1) a refactoring of the UX of toolchain installations, (2) enabling users to download (any number of) components concurrently, and (3) installing components as soon as they are downloaded. Additionally, I came across this issue, which I had experienced firsthand, and decided to address it, getting one more PR merged!

Conclusions

This was a fantastic project! I have learned a lot during this summer, and my appreciation for open source software – and for Rust in particular – has grown even stronger. I am incredibly grateful for this opportunity and deeply indebted to my mentor, rami3l, for his patience and guidance throughout this journey.

Looking ahead, I do not intend to close this chapter on contributing to Rust any time soon.

There are still several contributions that I plan to make: (a) investigating whether Rustup can automatically alert users that an update is available (history here and here) without introducing unwanted overhead; and (b) exploring more of Rustup’s codebase to see whether the overhead of running rustc through its Rustup proxy can be further reduced.

Overall, my experience was extremely positive. While I could have completed some of this work more quickly, I am confident that I will continue to be an active contributor to Rustup and The Rust Project. Thank you!

Acknowledgments

Before closing this chapter for good, I would like to thank a group of people who made this project such a rewarding experience:

- rami3l - Thank you for your patience throughout the summer in answering my endless questions. Your guidance made this experience truly unique, and I learned a great deal from you – not only about writing good Rust code but also about navigating and contributing to a large codebase.

- djc - Thank you for your thorough review comments and for teaching me how to write more idiomatic Rust and avoid common pitfalls.

- Kobzol - Thank you for organizing the entire GSoC experience for The Rust Project. Your feedback during the implementation process was invaluable, helping to catch bugs and inconsistencies in my work.

- nunoplopes - Thank you for suggesting this opportunity to me as an alternative way to spend my summer and for supporting me in delaying our thesis-related work so that I could focus on GSoC.